From “A Brief History of the Calculator” by Natalia Koval and Max Whitacre:

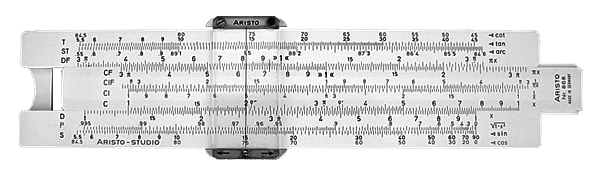

The Mechanical Calculator

The mechanical calculator was a 17th-century mechanical device used to automatically perform the basic operations of arithmetic. The first of these mechanical calculators was designed by Wilhelm Schickard in 1623. Mechanical calculators were produced until the 1970s, but they were most popular in the late 1940s and early 1950s, which is around when the handheld, portable Curta mechanical calculator was developed.

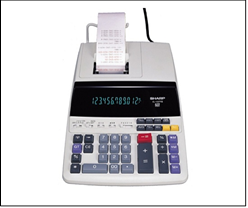

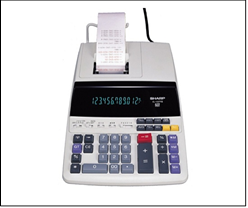

The Electronic Calculator

Although the first electronic calculator was developed by the Casio Computer Company in 1957, the first handheld electronic calculators were not developed until Texas Instruments designed the "Cal Tech" prototype in 1967. Calculators became more common in the 1970s. By 1970, a calculator could be made using just a few chips of low power consumption, allowing portable models powered by rechargeable batteries. The first portable calculators appeared in Japan in 1970 and were soon marketed around the world.

Programmable & Graphing Calculators

Programmable calculators were first introduced by Mathatronics and Casio in the mid-1960s; however, these machines were very heavy and expensive. In the Soviet Union, desktop programmable calculators were introduced in the 1970s, but the first pocket programmable calculator (the Elektronika B3-21) was developed around the end of 1977. Despite the limited capabilities of these Elektronika calculators, a hacker culture surfaced around them: hackers programmed adventure games and extensive libraries of calculus-related functions for engineers. A similar hacker culture surfaced around the HP-34 (an American calculator). Graphing calculators were first introduced in 1985 by Casio. Now, graphing calculators like the TI-Nspire are capable not only of graphing, evaluating arithmetic expressions, evaluating probabilities, solving equations, and a wide variety of other mathematical, geometric, and trigonometric operations; they are also programmable and have their own operating systems, with new versions released periodically.

The mechanical calculator was limited, at first, to addition and subtraction. Later models incorporated multiplication and division. However, they were slow and somewhat difficult to operate. They were also constrained by their precision (the number of digits they could carry before and after the decimal point), although within that limit their answers were accurate. Given these limitations, the mechanical calculator was used for only the most basic computations in engineering.

The electronic calculator resolved the problems of the slide rule and mechanical calculator and was a great boon to engineers. The electronic calculator also allowed for a greater range of mathematical operations, and later for simple programming. It still had a limit on precision (based on the circuitry used to perform the calculation), but its precision was much greater than that of a slide rule or mechanical calculator. As a result, engineers could now design to smaller tolerances, which reduced size and weight, led to greater efficiencies, and reduced the cost of products. More information can be found in the Wikipedia article on calculators.
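To make the idea of limited precision more concrete, here is a minimal Python sketch of the same division carried out with different numbers of digits. It is only an illustration: the function name `divide_with_digits` and the digit counts 3, 8, and 16 are my own assumptions, chosen for the example, and are not the specifications of any particular slide rule or calculator.

```python
from decimal import Context, Decimal


def divide_with_digits(numerator, denominator, digits):
    """Divide two whole numbers, keeping only `digits` significant digits.

    `digits` stands in for the precision limit of a calculating device
    (a hypothetical value, not taken from any real machine).
    """
    limited = Context(prec=digits)
    return limited.divide(Decimal(numerator), Decimal(denominator))


# The same calculation, 1 divided by 3, at three assumed precision limits.
for digits in (3, 8, 16):
    print(digits, divide_with_digits(1, 3, digits))
# 3 0.333
# 8 0.33333333
# 16 0.3333333333333333
```

Every one of these answers is accurate as far as it goes; the devices differ only in how many digits they can carry. That is why a tool with more digits lets an engineer work to smaller tolerances.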

Computers

Mainframe Computers

Mainframe computers (colloquially referred to as "big iron") are computers used primarily by large organizations for critical applications; bulk data processing, such as census, industry and consumer statistics, and enterprise resource planning; and transaction processing.

The term originally referred to the large cabinets called "main frames" that housed the central processing unit and main memory of early computers. Later, the term was used to distinguish high-end commercial machines from less powerful units. Most large-scale computer system architectures were established in the 1960s but continue to evolve.

The initial manufacturers of mainframe computers were IBM, Burroughs, Univac, Sperry-Rand, and GE.

Minicomputers

A minicomputer, or colloquially mini, is a class of smaller computers that was developed in the mid-1960s and sold for much less than mainframe and mid-size computers from IBM and its direct competitors. In a 1970 survey, the New York Times suggested a consensus definition of a minicomputer as a machine costing less than US$25,000, with an input-output device such as a teleprinter and at least four thousand words of memory, that is capable of running programs in a higher-level language, such as Fortran or BASIC. The class formed a distinct group with its own software architectures and operating systems. Minis were designed for control, instrumentation, human interaction, and communication switching as distinct from calculation and record keeping. Many were sold indirectly to original equipment manufacturers (OEMs) for final end-use application. During the two-decade lifetime of the minicomputer class (1965–1985), almost 100 companies formed and only a half dozen remained.

When single-chip CPU microprocessors appeared, beginning with the Intel 4004 in 1971, the term "minicomputer" came to mean a machine that lies in the middle range of the computing spectrum, between the smallest mainframe computers and the microcomputers. The term "minicomputer" is little used today; the contemporary term for this class of system is "midrange computer", such as the higher-end SPARC, Power Architecture, and Itanium-based systems from Oracle, IBM, and Hewlett-Packard.

The initial manufacturers of minicomputers were Digital Equipment Corporation (DEC), Data General, Prime, Computervision, Honeywell, and Wang Laboratories.

Workstation Computers

A workstation is a special computer designed for technical or scientific applications. Intended primarily to be used by one person at a time, workstations are commonly connected to a local area network and run multi-user operating systems. The term workstation has also been used loosely to refer to everything from a mainframe computer terminal to a PC connected to a network, but the most common form refers to the group of hardware offered by several current and defunct companies such as Sun Microsystems, Silicon Graphics, Apollo Computer, DEC, HP, NeXT, and IBM, which opened the door for the 3D graphics animation revolution of the late 1990s.

Workstations offered higher performance than mainstream personal computers, especially with respect to CPU and graphics, memory capacity, and multitasking capability. Workstations were optimized for the visualization and manipulation of different types of complex data such as 3D mechanical design, engineering simulation (e.g., computational fluid dynamics), animation and rendering of images, and mathematical plots. Typically, a workstation has the form factor of a desktop computer and consists of, at a minimum, a high-resolution display, a keyboard, and a mouse, but it may also offer multiple displays, graphics tablets, 3D mice (devices for manipulating 3D objects and navigating scenes), and similar accessories. Workstations were the first segment of the computer market to present advanced accessories and collaboration tools.

The increasing capabilities of mainstream PCs in the late 1990s blurred the lines somewhat with technical/scientific workstations. The workstation market previously employed proprietary hardware, which made workstations distinct from PCs; for instance, IBM used RISC-based CPUs for its workstations and Intel x86 CPUs for its business/consumer PCs during the 1990s and 2000s. However, by the early 2000s this difference had disappeared, as workstations now use highly commoditized hardware dominated by large PC vendors, such as Dell, Hewlett-Packard (later HP Inc.), and Fujitsu, selling Microsoft Windows or Linux systems running on the x86-64 architecture, such as Intel Xeon or AMD Opteron CPUs.

Microcomputers

The first microcomputer was the Japanese Sord Computer Corporation's SMP80/08 (1972), which was followed by the SMP80/x (1974). The French developers of the Micral N (1973) filed their patents with the term "Micro-ordinateur", a literal equivalent of "Microcomputer", to designate a solid-state machine designed with a microprocessor. In the USA, the earliest models, such as the Altair 8800 and Apple I, were often sold as kits to be assembled by the user. They came with as little as 256 bytes of RAM and no input/output devices other than indicator lights and switches, making them useful mainly as a proof of concept to demonstrate what such a simple device could do. However, as microprocessors and semiconductor memory became less expensive, microcomputers in turn grew cheaper and easier to use.

All these improvements in cost and usability resulted in an explosion in the popularity of microcomputers during the late 1970s and early 1980s. A large number of computer makers packaged microcomputers for use in small business applications. By 1979, many companies such as Cromemco, Processor Technology, IMSAI, North Star Computers, Southwest Technical Products Corporation, Ohio Scientific, Altos Computer Systems, Morrow Designs, and others produced systems designed either for a resourceful end user or for a consulting firm to deliver business systems such as accounting, database management, and word processing to small businesses. This gave businesses unable to afford leasing a minicomputer or time-sharing service the opportunity to automate business functions without (usually) hiring a full-time staff to operate the computers. A representative system of this era would have used an S-100 bus, an 8-bit processor such as an Intel 8080 or Zilog Z80, and either the CP/M or MP/M operating system. The increasing availability and power of desktop computers for personal use attracted the attention of more software developers. In time, as the industry matured, the market for personal computers standardized around IBM PC compatibles running DOS, and later Windows. Modern desktop computers, video game consoles, laptops, tablet PCs, and many types of handheld devices, including mobile phones, pocket calculators, and industrial embedded systems, may all be considered examples of microcomputers according to the definition given above.

The initial manufacturers of microcomputers that gained wide acceptance were Apple, Radio Shack, Commodore, Osborne, and Kaypro. The later manufacturers of microcomputers were Apple, IBM, Compaq, Dell Computer, Packard Bell, AST Research, and Gateway 2000. Today there are dozens of manufacturers of microcomputers.

Supercomputers

A supercomputer is a computer with a high level of computing performance compared to a general-purpose computer. The performance of a supercomputer is measured in floating-point operations per second (FLOPS) instead of million instructions per second (MIPS). As of 2015, there are supercomputers that can perform quadrillions of floating-point operations per second, a rate measured in petaFLOPS (PFLOPS). The majority of supercomputers today run Linux-based operating systems.
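To give a feel for what a quadrillion operations per second means, the following short Python sketch compares how long one large job would take at two different speeds. The workload of 10**18 operations is a made-up figure for illustration, and the name `seconds_to_finish` is my own; the sketch also assumes the machine sustains its rated speed the whole time, which real machines rarely do.

```python
# Standard metric prefixes applied to floating-point operations per second.
GIGAFLOPS = 10**9    # one billion operations per second
PETAFLOPS = 10**15   # one quadrillion operations per second


def seconds_to_finish(total_operations, flops):
    """Seconds needed to perform total_operations at a steady rate of flops."""
    return total_operations / flops


# Hypothetical workload: one quintillion (10**18) floating-point operations.
workload = 10**18

print(seconds_to_finish(workload, PETAFLOPS))  # 1000.0 seconds, under 17 minutes
print(seconds_to_finish(workload, GIGAFLOPS) / (365 * 24 * 3600))  # roughly 31.7 years
```

The same job that a one-petaFLOPS machine finishes in well under an hour would occupy a one-gigaFLOPS machine for decades.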

Supercomputers play an important role in the field of computational science, and are used for a wide range of computationally intensive tasks in various fields, including quantum mechanics, weather forecasting, climate research, oil and gas exploration, molecular modeling (computing the structures and properties of chemical compounds, biological macromolecules, polymers, and crystals), and physical simulations (such as simulations of the early moments of the universe, airplane and spacecraft aerodynamics, the detonation of nuclear weapons, and nuclear fusion). Throughout their history, they have been essential in the field of cryptanalysis.

Supercomputers were introduced in the 1960s, and for several decades the fastest were made by Seymour Cray at Control Data Corporation (CDC), Cray Research, and subsequent companies bearing his name or monogram. The first such machines were highly tuned conventional designs that ran faster than their more general-purpose contemporaries. Through the 1960s, they began to add increasing amounts of parallelism, with one to four processors being typical. From the 1970s, the vector computing concept, with specialized math units operating on large arrays of data, came to dominate. A notable example is the highly successful Cray-1 of 1976. Vector computers remained the dominant design into the 1990s. From then until today, massively parallel supercomputers with tens of thousands of off-the-shelf processors have been the norm.

The computer had a tremendous impact on engineering, as it resolved all the issues of the slide rule, the mechanical calculator, and the electronic calculator. In addition, it had a full array of mathematical operations, with much greater precision, and was fully programmable for complex calculations. As computers became faster, smaller, and less expensive, they were integrated into engineering at all levels. They even spawned the development of Computer Aided Design (CAD), Computer Aided Engineering (CAE), and Computer Aided Manufacturing (CAM) software applications.

The subject of computer modeling and its issues, concerns, and limitations is covered in another paper that I have written and is beyond the scope of this paper. I would direct you to that paper, “Computer Modeling”, for further information. Below is the recap of this paper.

Conclusions

Mathematical Tools are like any other tool: they can be used properly or improperly, by skilled or unskilled persons, and even when used properly in the hands of a skilled person they can produce improper or incorrect results. In the hands of a biased person, they can give a false illusion of the correctness and believability of the results, as I have examined in another article of mine, Oh What a Tangled Web We Weave.

Consequently, we should all be wary of the results of Mathematical Tools, and verify their correctness to the best of our ability before we proceed with the results. We should also remember that there are things that we know, things that we know we don't know, and things that we don't know that we don't know, and it is from the things that we don't know that we don't know that the serious problems often arise.

Disclaimer

Please Note: many academics, scientists, and engineers would critique what I have written here as neither accurate nor thorough. I freely acknowledge that these critiques are correct. It was not my intention to be accurate or thorough, as I am not qualified to give an accurate or thorough description. My intention was to be understandable to a layperson so that they can grasp the concepts. The entire education and training of academics, scientists, and engineers is based on accuracy and thoroughness, and as such, they strive for this accuracy and thoroughness. I believe it is essential for all laypersons to grasp the concepts of this paper, so that they can make more informed decisions in those areas of human endeavor that deal with this subject. As such, I did not strive for accuracy and thoroughness, only understandability.

Most academics, scientists, and engineers, when speaking or writing for the general public (and many science writers as well), strive to be understandable to the general public. However, they often fall short on understandability because of their commitment to accuracy and thoroughness, as well as some audience awareness factors. Their two biggest problems are accuracy and the audience's knowledge of the topic.

Accuracy is a problem because academics, scientists, engineers, and science writers are loath to be inaccurate. This is because they want the audience to obtain the correct information, and because of the possible negative repercussions amongst their colleagues and the scientific community at large if they are inaccurate. However, because modern science is complex, this accuracy can, and often does, lead to confusion amongst the audience.

The audience's knowledge of the topic is important because most modern science is complex, with its own words, terminology, and basic concepts that the audience is unfamiliar with or misinterprets. The audience becomes confused (even while smiling and lauding the academic, scientist, engineer, or science writer) and does not achieve understanding. Many times, the academic, scientist, engineer, or science writer uses the scientific discipline's own words, terminology, and basic concepts without realizing that the audience misinterprets, or has no comprehension of, these items.

It is for this reason that I place understandability as the highest priority in my writing, and I am willing to sacrifice accuracy and thoroughness to achieve understandability. There are many books, websites, and videos available that are more accurate and thorough. The subchapter on “Further Readings” also contains books on various subjects that can provide more accurate and thorough information. I leave it to the reader to decide if they want more accurate or thorough information and to seek out these books, websites, and videos for this information.

© 2025. All rights reserved.

If you have any comments, concerns, critiques, or suggestions, I can be reached at mwd@profitpages.com. I will review reasoned and intellectual correspondence, and it is possible that I will change my mind, or at least update the content of this article.