The Personal Website of Mark W. Dawson

Containing His

Articles, Observations, Thoughts, Meanderings,

and some would say Wisdom (and some would say not).

Climate Change

- Introduction

- Background

- Climate Change Overview

- Natural Cycle of Global Climate Change

- Systemic Problems

- The Limits of Human Knowledge

- The Carbon Dioxide Issue

- The Pause

- Statistical and Probability Methods Issues

- Hard Data vs. Soft Data

- Data Mining, Data Massaging and Data Quality

- Computer Modeling Issues, Concerns & Limitations

- Open and Closed Systems

- GIGO (Garbage In Garbage Out)

- Chaos, Complexity, and Network Science

- Showoffs

- The 97 Percent Solution

- Final Thoughts

- Further Readings

- Disclaimer

Introduction

I believe in climate change. I believe the climate has changed in the past, the climate is currently changing, and the climate will change in the future. This is a meteorological and geological scientific fact. The question is whether human activity is causing the current climate change. This may or may not be true, depending upon your interpretation of scientific facts and beliefs. If you have read my observation "On the Nature of Scientific Inquiry," you know that I have a scientific orientation to my thinking, and in this article I apply that scientific thinking to many of the issues and concerns of climate change.

I should point out that I am NOT a scientist or engineer, nor have I received any education or training in science or engineering. This paper is the result of my readings on this subject over the past decades. Many academics, scientists, and engineers would critique what I have written here as neither accurate nor thorough. I freely acknowledge that these critiques are correct. It was not my intention to be accurate or thorough, as I am not qualified to give an accurate or thorough description. My intention was to be understandable to laypersons so that they can grasp the concepts. The entire education and training of academics, scientists, and engineers is based on accuracy and thoroughness, and as such, they strive for this accuracy and thoroughness. When writing for the general public, this accuracy and thoroughness can often lead to less understandability. I believe it is essential for all laypersons to grasp the concepts within this paper, so they can make more informed decisions on those areas of human endeavor that deal with this subject. As such, I did not strive for accuracy and thoroughness, only understandability.

Background

To examine Climate Change, we must first look at the geological and meteorological history of the Earth. When the Earth was first formed it was molten and volcanic. It cooled and simply became hot. It cooled even further, and then warmed, and at times in our Earth's geological history the earth's climate varied tremendously. At different times the earth was covered in ice, at other times it had a tropical environment and was covered with lush vegetation and animal life, and the climate has been in all states in between. This article examines these topics, and others, to highlight some of the issues and concerns on Climate Change science.

Climate Change Overview

In recent geological history (the last 10,000 years), we have exited an ice age and entered a more temperate climate. During this time, the Earth has been both cooler and warmer, varying by as much as 3° or 4° Fahrenheit (approximately 2° Celsius). Until the 20th century, no one would have said that human activity was the cause of this variance. The largest human activity that could have affected the climate is deforestation and/or the burning of forests. Even when this happened, the Earth's climate did not change significantly.

It is only in the 20th century that climate change proponents have declared that human activity is affecting the climate. Even during the 20th century, there have been periods when the Earth's climate was cooler or warmer by about 1° Fahrenheit. During the 20th century, climate change has generally been an increase in temperature. The real question is: is that increase outside the normal range of what we should expect, given the short time frame (100 years) over which we are measuring? And is human activity the cause of this change?

In this paper, I wish to point out the Natural Climate Cycles and the Systemic Problems with current Climate Models. Rather than go into the specific science or scientific issues, I will be examining the general issues, concerns, and limitations of Climate Modeling. The two main issues are: 1) Natural Climate Cycles, and 2) Systemic Problems in Climate Modeling.

Natural Cycle of Global Climate Change

The Earth's Climate has a Natural Cycle that must be accounted for in any Climate Model. The OSS website has a good overview of this natural cycle, and I would encourage you to visit their website for more information.


It is important to understand the natural cycles of the Earth's climate change in order to determine if we are in a natural cycle, or if something else is happening. For this reason, you should really visit this website. However, I will provide my perspective based on the following charts.

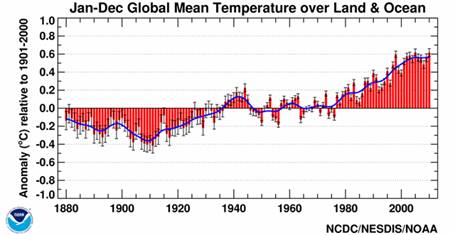

The following chart demonstrates the current concern about Climate Change.

Just looking at this chart would lead you to believe that we have a serious climate change problem. In the past 40 years, we have seen a rise in temperatures over the entire globe. But there is another way to view this chart. For the first sixty years of this chart, the temperatures dropped and then rose. For the next forty years, the temperature varied slightly up or down. It is only in the last 40 years that the temperature has risen. The first 60 years showed a temperature variation of approximately 0.4° Celsius, while the middle forty years showed a variation of about 0.15° Celsius. Over the last forty years, there has been a 0.6° Celsius change. And considering that in the first 60 years the temperature went down and then up, perhaps the upward temperatures of the last forty years will drop in the next 20 years. This could be part of a natural cycle, but it could also be an indication of a climate change problem. To gain a better perspective, you need to look at a longer period of geological time. After all, 140 years is a very short time frame when dealing with geological phenomena.

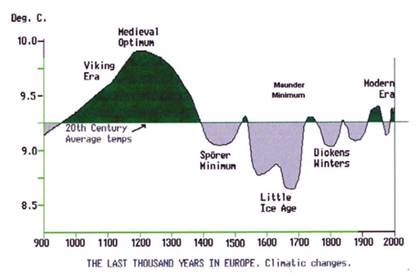

The next chart provides a better perspective over a longer geological time frame of 1,000 years.

Temperatures in Europe plotted against the 20th-century average. (Source: Based on diagram in 2nd assessment report IPCC 1995/96.)

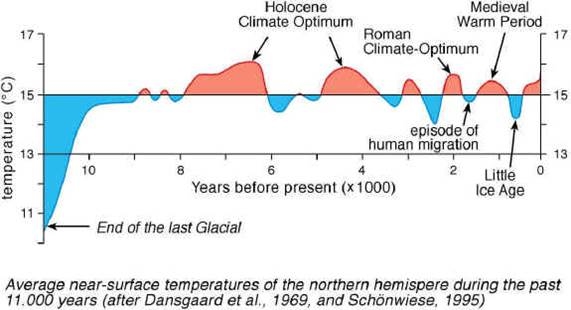

As can be seen from this perspective, our current climate change falls well within other climate change variations of the past 1,000 years. The next chart provides a 10,000-year perspective. Again, our current climate change falls within the variations of the last 10,000 years.

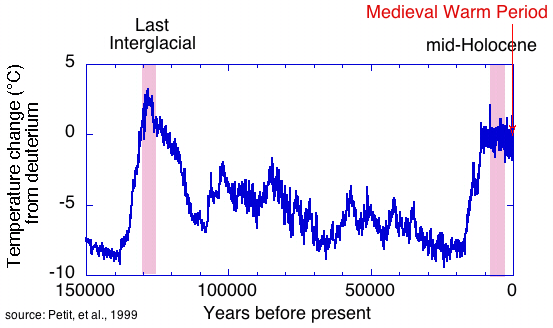

If you look back over 150,000 years you get another perspective.

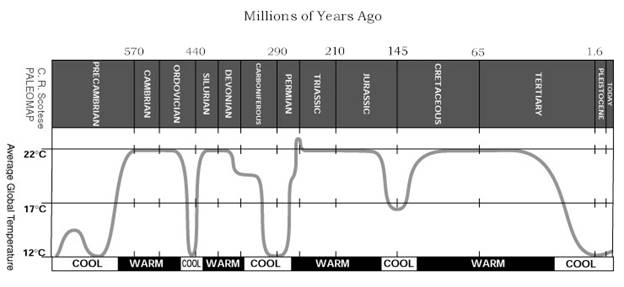

Looking at a chart of the geological ages gives another perspective.

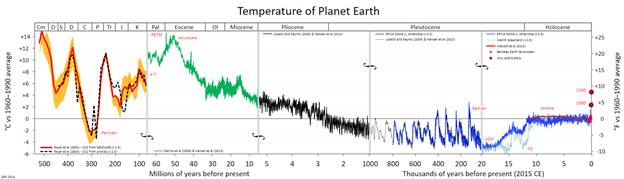

And finally, looking back even further provides more perspective.

The purpose of reviewing these charts is not to debunk climate change proponents but to demonstrate the importance of understanding natural climate change cycles. You must understand natural climate change cycles to determine if something unusual is currently occurring. You must have a climate change model that explains at least the last 10,000 years of climate change to determine what is presently happening. If you have a good climate change model that explains the last 10,000 years, you can extract the natural cycles and examine what may be unnatural. However, what is unnatural does not necessarily mean human activity is the cause. It may be human activity, or it may be because of unknown factors. This is where science can step in to determine what unnatural factors could be contributing to climate change. But until you can extract the natural cycle, you cannot determine if anything unnatural is occurring.

Systemic Problems

The Limits of Human Knowledge

When dealing with any scientific or engineering subject, it is very important to remember three things about the limitations of human knowledge:

- That we know what we know, and we need to be sure that what we know is correct.

- That we know what we don't know, and that allowances are made for what we don't know.

- That we don't know that we don't know, which cannot be allowed for as it is totally unknown.

The limits of human knowledge are expanding, but there is much more that we don't know than there is that we do know. Indeed, even when we know what we know, what we know may be incorrect. What we know that we don't know always leads to ambiguity, mistakes, and false conclusions. That which we don't know that we don't know is the killer in any scientific or engineering endeavor. Always be cognizant of these three items when dealing with any scientific or engineering subject.

There are several big science issues that impact the veracity of a Climate Computer Model regarding the limitations of human knowledge. I will not be discussing the science of these issues but instead focusing on their impacts on Climate Models.

Earthly Issues

There are three Earthly issues that need to be resolved for Climate Models to be veracious. They are:

Volcanic Activity

Volcanic eruptions are very important to climate change. The location, the intensity, the amount of particulate material ejected into the atmosphere, and the heights the particulate material reaches all have a large impact on the global climate. Minor volcanic eruptions have localized effects, while major volcanic eruptions have global effects. This is not to mention Super Volcanic Eruptions, which are Extinction Level Events. Yet scientists cannot predict the timing, location, intensity, or particulate output of a volcanic eruption. Climatologists utilize statistical methods in their Climate Models to predict volcanic events, but statistical methods can be very suspect (see 'Statistical and Probability Methods Issues' below).
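As a rough illustration of what a statistical prediction of volcanic activity can and cannot tell you, the sketch below treats large eruptions as a Poisson process with a constant average rate. Both the Poisson assumption and the rate of one large eruption per 50 years are hypothetical, chosen only for illustration; this is not a claim about how any particular Climate Model handles volcanoes.

```python
import math


def prob_at_least_one(rate_per_year: float, years: float) -> float:
    """Probability of at least one event within `years`, ASSUMING events
    follow a Poisson process with a constant average rate (a simplification)."""
    if rate_per_year < 0 or years < 0:
        raise ValueError("rate and duration must be non-negative")
    return 1.0 - math.exp(-rate_per_year * years)


# Hypothetical rate: one large eruption every 50 years on average.
rate = 1 / 50
for horizon in (1, 10, 50):
    p = prob_at_least_one(rate, horizon)
    print(f"P(at least one large eruption in {horizon:>2} years) = {p:.2f}")
```

Note that the output is only a probability over a time window: it says nothing about where an eruption will occur, how intense it will be, or how much particulate material it will eject, which is exactly the gap described above.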

Earthquakes

Earthquakes also have a large impact on climate change. The location, intensity, and other factors have a large impact on climate (both local and global). There is also some evidence that climate change can trigger earthquakes. The question then becomes which came first, the chicken or the egg. Probably both answers are correct, in that sometimes earthquakes impact the climate and sometimes the climate triggers an earthquake, and they feed back into each other. Again, Climatologists utilize statistical methods to predict the probability of an earthquake, but statistical methods can be very suspect (see 'Statistical and Probability Methods Issues' below).

Cloud Formation

Cloud formation is extremely important in Climate Modeling. The type, number, composition, heights, locations, and other factors regarding clouds must be correct to properly create a Climate Model. While scientists know quite a bit about cloud formation, there is one key question that is somewhat uncertain: what starts the formation of a cloud, or more scientifically, what is the trigger mechanism that starts cloud formation? A few hypotheses have been put forward on this question, but there is no settled science on the answer. In addition, the actual mechanism that triggers cloud formation tends to be nebulous (see the Sidney Harris cartoon below). One respected scientist, Henrik Svensmark, has put forward a more definitive hypothesis that, if correct, has serious consequences for climate modeling. He has spent over two decades studying this issue and gathering statistical, and now finally experimental, evidence to back his hypothesis. Briefly, his hypothesis is that a cosmic ray coming from outer space strikes a particle in the atmosphere and imparts a charge to the particle, which attracts other particles to it, and this is the trigger for cloud formation. While this is only a hypothesis, if it is true it has a significant impact on climate modeling. As cosmic rays are entirely random in number, location, and intensity, and the striking of a particle in the Earth's atmosphere by a cosmic ray is entirely random, there is no way to computer model them other than by statistical means (see 'Statistical and Probability Methods Issues' below). It is also known that the magnetic fields of the Earth and, more importantly, the Sun deflect cosmic rays. As the Earth's and the Sun's magnetic fields vary in intensity, more or fewer cosmic rays strike the Earth. This variation of the magnetic fields is apparently random, or follows an unknown cycle, and therefore it cannot be Climate Modeled.
His hypothesis needs to be thoroughly examined and proven or disproven by other scientists. If his hypothesis is true, it would render any climate model highly unpredictable. In any case, the trigger mechanism for cloud formation needs to be better explained by scientists and utilized in the Climate Models.

Heavenly Issues

There are four Heavenly issues that need to be resolved for Climate Models to be veracious. They are:

Solar Activity Cycles

The energy from the Sun is the major driver of climate; the other driver is geothermal activity, but geothermal activity plays only a minor role in comparison to solar energy. A very small increase or decrease in the amount of solar energy reaching the Earth will have a major impact on the climate. Indeed, a change of less than one hundredth of one percent in solar energy levels has a major impact on the climate. Although the Sun is generally stable, its energy output does vary. Until recently, it was not possible to measure these small variations in solar energy. Recently, NASA has launched satellites that are attempting to measure this solar energy variability on a fine scale. The information obtained so far is insufficient to be utilized for climate modeling. But for an accurate climate model, we need to incorporate the variability of solar activity.

The Eccentricity of Earth's Orbit Around the Sun

Changes in orbital eccentricity affect the Earth-sun distance, impacting the amount of Solar radiation (heat) that strikes Earth. The shape of the Earth's orbit changes from being elliptical (high eccentricity) to being nearly circular (low eccentricity) in a cycle that takes between 90,000 and 100,000 years. When the Earth's orbit is highly elliptical, the amount of solar radiation received at the closest point to the Sun would be on the order of 20 to 30 percent greater than at the farthest point from the Sun, resulting in a substantially different climate from what we experience today.
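A difference of this size follows from the inverse-square law: the perihelion distance is a(1 - e) and the aphelion distance is a(1 + e), where a is the orbit's semi-major axis and e its eccentricity, so the ratio of sunlight received is ((1 + e) / (1 - e))². A minimal Python sketch of that arithmetic follows; today's eccentricity is about 0.0167, and 0.06 is used as an illustrative high value within the cycle (an assumption, not a measured maximum).

```python
def perihelion_aphelion_ratio(e: float) -> float:
    """Ratio of solar flux at perihelion to solar flux at aphelion.

    Perihelion distance is a(1 - e) and aphelion distance is a(1 + e), so by
    the inverse-square law the flux ratio is ((1 + e) / (1 - e)) ** 2; the
    semi-major axis a cancels out.
    """
    if not 0 <= e < 1:
        raise ValueError("eccentricity of a closed orbit must be in [0, 1)")
    return ((1 + e) / (1 - e)) ** 2


# 0.0 = circular orbit, 0.0167 = roughly today, 0.06 = illustrative high value
for e in (0.0, 0.0167, 0.06):
    extra = (perihelion_aphelion_ratio(e) - 1) * 100
    print(f"e = {e:<6} -> about {extra:.0f}% more sunlight at perihelion")
```

For today's nearly circular orbit the difference is only about 7 percent; at e = 0.06 it is about 27 percent.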

Obliquity (change in axial tilt) of Earth

As the axial tilt increases, the seasonal contrast increases, so that winters are colder and summers are warmer in both hemispheres. Today, the Earth's axis is tilted 23.5 degrees from the perpendicular to the plane of its orbit around the Sun. But this tilt changes. During a cycle that averages about 40,000 years, the tilt of the axis varies between 22.1 and 24.5 degrees. Because this tilt changes, the seasons as we know them can become exaggerated. More tilt means more severe seasons (warmer summers and colder winters); less tilt means less severe seasons (cooler summers and milder winters).
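One simple way to see why more tilt means more severe seasons is to compute how high the Sun climbs at local solar noon on the solstices. At a solstice the Sun's declination equals plus or minus the axial tilt, and the noon elevation is 90 degrees minus the absolute difference between latitude and declination. The sketch below assumes a latitude of 45° North for illustration and ignores atmospheric refraction.

```python
def noon_sun_elevation(latitude_deg: float, declination_deg: float) -> float:
    """Elevation of the Sun above the horizon at local solar noon, in degrees.

    Simplified geometry: ignores atmospheric refraction. A negative result
    means the Sun stays below the horizon at noon.
    """
    return 90.0 - abs(latitude_deg - declination_deg)


latitude = 45.0  # illustrative mid-latitude location in the Northern Hemisphere
for tilt in (22.1, 23.5, 24.5):
    summer = noon_sun_elevation(latitude, +tilt)  # June solstice: declination = +tilt
    winter = noon_sun_elevation(latitude, -tilt)  # December solstice: declination = -tilt
    print(f"tilt {tilt}: summer noon Sun {summer:.1f} deg, "
          f"winter {winter:.1f} deg, difference {summer - winter:.1f} deg")
```

At 45° North the summer-to-winter difference in noon Sun height is twice the tilt, so it grows from 44.2 degrees at a tilt of 22.1 degrees to 49.0 degrees at 24.5 degrees. The simple formula holds away from the poles; inside the polar circles the winter Sun may not rise at all.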

Galactic Motion

Another Heavenly issue is the fact that our Sun revolves around the center of our galaxy. One revolution takes roughly 225 to 250 million years, and during that time the Sun moves through less dense and more dense areas of galactic dust. The question is whether this dust density affects the amount of solar energy that reaches the Earth. In a denser dust region, we can expect that some of the solar energy is reflected and does not reach the Earth, while in a less dense area more solar energy would reach the Earth. How much of an impact this has is totally unknown. It may also be totally unknowable, as we have no way of determining the density of the galactic dust around the Sun, nor the density of the dust in the path of the Sun's revolution around the Galaxy. It should be noted that this movement can take tens, if not hundreds, of thousands of years to have an impact. However, the question is whether we are on a boundary between lesser and greater dust concentrations, and whether this boundary is an abrupt or gradual change. We know that the Sun is moving into a small galactic arm of the Milky Way Galaxy, so we should expect that more dusty regions will be encountered.

Although Heavenly Issues affect the global climate over tens of thousands of years, they do have an impact. Neither the magnitude nor the timing of these impacts is currently known. Consequently, it is not possible to climate model these impacts.

Both the Earthly and Heavenly Issues point out the limitations of human knowledge. Given these and other limitations of climate models, they can be suspect even if they try to account for these unknowns and limitations.

The Carbon Dioxide Issue

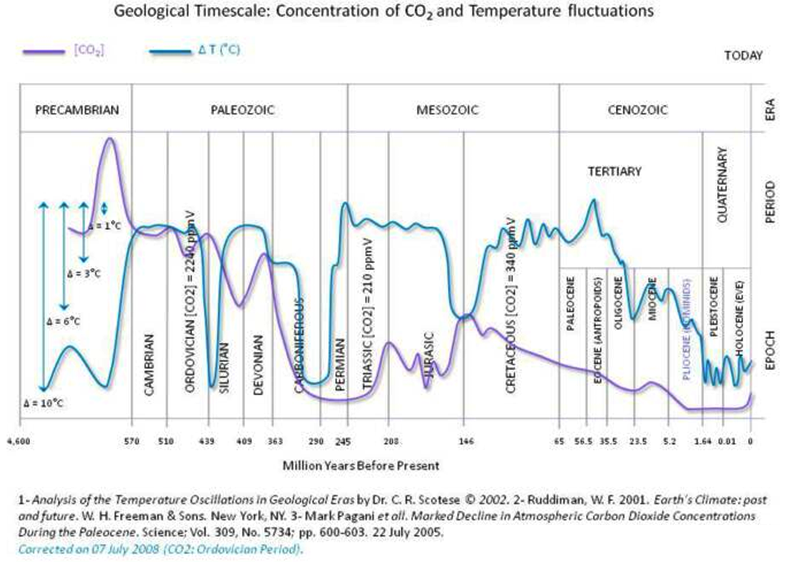

Much has been written, and miswritten, on the effect of Carbon Dioxide on the climate. We have good (but not great) scientific evidence on the concentration of Carbon Dioxide and Temperature through the geological ages. The following chart graphs this relationship:

As can be seen from this chart there is no apparent correlation or causation in this relationship (see my Observation on "Oh What a Tangled Web We Weave" for more information on statistics). Therefore, I am highly skeptical of significant impacts of Carbon Dioxide on the climate. It should also be remembered that Carbon Dioxide is essential to the life cycle on the Earth and more Carbon Dioxide can be beneficial to agriculture. I would suggest that we be very careful in trying to reduce or regulate Carbon Dioxide as this could have unanticipated and negative consequences.

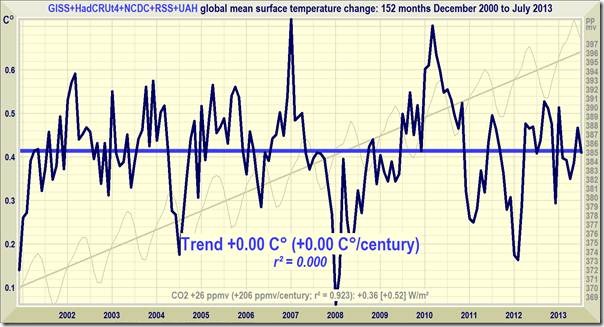

The Pause

One of the most disconcerting aspects of Climate Models is their predictability, as discussed in the "Computer Modeling Issues, Concerns & Limitations" section below, as well as in my Observation on "Predictability & Falsifiability in Scientific Theories". Not only has their predictability been suspect, but it appears that we may be in a warming pause, as illustrated in the accompanying chart.

If we are indeed in a warming pause, the questions are the duration of this pause, its causes, and its impact on the longer-term course of climate change. Also, this apparent pause was not predicted by any Climate Model, which calls into question the veracity of climate models.

Statistical and Probability Methods Issues

Climatologists often utilize statistical and probability methods in their climatology computer models and simulations. However, statistical and probability methods used this way are often educated guesstimates. If the algorithms and data are incorrect, the climatology computer models or simulations will be incorrect. In addition, the more you actually know, the more reliable your climatology model or simulation. This implies that the less you know, and the more you rely on statistical and probability methods, the less reliable your climatology model or simulation is. However, it is not possible to know everything, so you must resort to statistical and probability methods. This involves utilizing the Data Mining, Data Massaging, and Data Quality techniques discussed below. When you do this, however, you must reveal the algorithms, the raw data, and the data mining, massaging, and quality controls that you have utilized. This is necessary so that other scientists and mathematicians can examine what has been done and verify its veracity. If this information is not revealed, or is obscured, the climatology model or simulation is highly suspect. Unfortunately, far too often this information is not fully revealed in climatology research. You must also remember the following famous quote about statistics:

If you torture the data long enough, it will confess to anything. - from Darrell Huff's book "How to Lie With Statistics" (1954).

And always remember the following humorous cartoon by Sidney Harris:

For more information on statistical and probability methods in climatology computer models and simulations I would recommend the following websites:

For more information on the facts of Climate Models, I would suggest that you visit the RealClimate website's FAQ on climate models.


Another skeptic of the use of statistics in Climate Change is Tony Heller. He is not a climate scientist. (Neither is Al Gore or Bill Nye, the Science Guy.) Heller is a Computer Scientist and Geologist who enjoys digging into data. He has a website, realclimatescience.com, which examines the use, and misuse, of statistics in Climate Change. This website is well worth reviewing, and his YouTube video "My Gift to Climate Alarmists" is well worth watching. In this video, he demonstrates just how charts are manipulated by the climate alarmists.
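The "torture the data" warning quoted above can be made concrete. One common way data gets tortured is by testing many hypotheses and reporting only the one that passes. Assuming the tests are independent and each uses the conventional 5 percent significance threshold (both assumptions for illustration), the chance of at least one purely accidental "significant" result is 1 - 0.95^k for k tests:

```python
def chance_of_false_positive(num_tests: int, alpha: float = 0.05) -> float:
    """Probability that at least one of `num_tests` independent significance
    tests comes out 'significant' purely by chance when no real effect exists.
    ASSUMES the tests are independent and each uses threshold `alpha`."""
    if num_tests < 0 or not 0 <= alpha <= 1:
        raise ValueError("need num_tests >= 0 and 0 <= alpha <= 1")
    return 1.0 - (1.0 - alpha) ** num_tests


for k in (1, 5, 20, 100):
    print(f"{k:>3} tests -> {chance_of_false_positive(k):.0%} chance of a spurious 'finding'")
```

With 20 tries, a spurious "finding" is more likely than not (about 64 percent), which is why the number of analyses attempted should be disclosed along with the one that is reported.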

Hard Data vs. Soft Data


Hard data is a verifiable fact that is acquired from reliable sources according to a robust methodology. Soft data is data based on qualitative information such as a rating, survey, or poll. The difference: hard data implies data that is directly measurable, factual, and indisputable, while soft data implies data that has been collected from qualitative observations and quantified. This doesn't mean that such data is unreliable. In many cases, the best data available is soft data. It is common to base business decisions on soft data such as customer satisfaction and product reviews.

Hard data is the foundation of Science. Without hard data the scientific results may be questionable (but not always). Even with hard data there are other problems with data utilization in science. Some of these problems are as follows.

Data Mining, Data Massaging and Data Quality

Data Mining

Data Mining is the process of discovering patterns in large data sets involving methods at the intersection of machine learning, statistics, and database systems. Data mining is an interdisciplinary subfield of computer science with an overall goal to extract information (with intelligent methods) from a data set and transform the information into a comprehensible structure for further use. Data mining is the analysis step of the "knowledge discovery in databases" process, or KDD. Aside from the raw analysis step, it also involves database and data management aspects, data pre-processing, model and inference considerations, interestingness metrics, complexity considerations, post-processing of discovered structures, visualization, and online updating. The term "data mining" is in fact a misnomer, because the goal is the extraction of patterns and knowledge from large amounts of data, not the extraction (mining) of data itself. It also is a buzzword and is frequently applied to any form of large-scale data or information processing (collection, extraction, warehousing, analysis, and statistics) as well as any application of computer decision support systems, including artificial intelligence (e.g., machine learning) and business intelligence. The book "Data mining: Practical machine learning tools and techniques with Java" (which covers mostly machine learning material) was originally to be named just "Practical machine learning," and the term data mining was only added for marketing reasons. Often the more general terms (large scale) data analysis and analytics (or, when referring to actual methods, artificial intelligence and machine learning) are more appropriate.
The actual data mining task is the semi-automatic or automatic analysis of large quantities of data to extract previously unknown, interesting patterns such as groups of data records (cluster analysis), unusual records (anomaly detection), and dependencies (association rule mining, sequential pattern mining). This usually involves using database techniques such as spatial indices. These patterns can then be seen as a kind of summary of the input data, and may be used in further analysis or, for example, in machine learning and predictive analytics. For example, the data mining step might identify multiple groups in the data, which can then be used to obtain more accurate prediction results by a decision support system. Neither data collection, data preparation, nor result interpretation and reporting is part of the data mining step, but they do belong to the overall KDD process as additional steps.

Data Mining in Climate Science is often utilized to aggregate data from varying and disparate sources for use in climate computer models. Data mining is not an easy task, as the algorithms used can get very complex and data is not always available in one place; it needs to be integrated from various heterogeneous data sources. These factors also create some issues. Although Data Mining is very important and helpful, you need to be very careful when utilizing it. If it is done incorrectly or improperly, you will introduce the problem of Garbage In (see below) into your computer model.

Data Massaging

Data massaging (sometimes referred to as "Data Adjustments" or "Corrected Data") is a term for cleaning up data that is poorly formatted or missing required data for a particular purpose. It also refers to adjusting data that scientists believe was affected by external circumstances (usually equipment or location) unrelated to the "real" results. The term implies manual processing or highly specific queries to target data that is breaking or limiting an automated process or analysis. The term is also informally used to indicate that outliers in data were dropped because they were interfering with the visual presentation or the confirmation of a particular theory. As such, data massaging has potential ethical, compliance, and risk implications.


One of the joys of research is feeding a mass of data into a computer program, pushing the return button, and seeing a graph magically appear. A straight line! That ought to give some nice kinetic data. But on second glance the plot is not quite satisfactory. There are some annoying outlying points that skew the rate constant away from where it ought to be. No problem. This is just a plot of the raw data. Time to clean it up. Let's exclude all data that falls outside the three-sigma range. There, that helped. Tightened that error bar and moved the constant closer to where it should be. Let's try a two-sigma filter. Even better! Now that's some data that's publishable. You have just engaged in the venerable practice of data massaging. A common practice, but should it be? Every scientist will agree that you should not choose data: selecting data that supports your argument and ignoring data that does not. But even here there are some grey areas. Not every reaction system gives clean kinetics. Is there anything wrong with studying a system that can be analyzed, rather than beating your head against the wall of an intractable system? Gregor Mendel didn't think so. In his studies of plant heredity, he did not randomly sample data from every plant in his garden. He found that some plants gave easily analyzed data while others did not. Naturally, he studied those that gave results that made sense. But among those systems he studied, he did not pick and choose his data. Even some of the best scientists will apply what they consider rigorous statistical filters to improve the data, to clean it up, to tighten the error bars. Is this acceptable? Some statisticians say it is not. They argue that no data should be excluded on the basis of statistics. Statistics may point out which data should be further scrutinized, but no data should be excluded on the basis of statistics. I agree with this point of view. When you 'improve' data, you exclude data.
Should not all the data be available to the public? If there is a wide spread in the data, is not that fact in itself a valuable piece of information? A reader ought to know how reliable the data is and not have to guess how good it was before the two-sigma filter was applied. Is data massaging unethical? Not if you clearly state what you have done. But the practice is unwise and ought to be discouraged.
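The two-sigma filter described in the passage above is easy to reproduce. The sketch below uses made-up measurements (hypothetical numbers, not climate data) and Python's standard `statistics` module to show how a single-pass k-sigma filter tightens the standard error and shifts the mean simply by discarding points:

```python
import statistics


def summarize(values):
    """Return (count, mean, standard error of the mean)."""
    n = len(values)
    return n, statistics.mean(values), statistics.stdev(values) / n ** 0.5


def sigma_filter(values, k):
    """Single-pass k-sigma filter: drop points more than k sample standard
    deviations away from the mean of the full data set."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [v for v in values if abs(v - mean) <= k * sd]


# Hypothetical repeated measurements of one quantity (made-up numbers).
raw = [10.1, 9.9, 10.0, 10.2, 9.8, 10.1, 9.9, 10.0, 11.5, 8.7]

for label, data in (("raw", raw),
                    ("3-sigma", sigma_filter(raw, 3)),
                    ("2-sigma", sigma_filter(raw, 2))):
    n, mean, se = summarize(data)
    print(f"{label:>8}: n={n:2d}  mean={mean:.3f}  std. error={se:.3f}")
```

With these numbers the three-sigma filter removes nothing, while the two-sigma filter drops the single high reading of 11.5, pulling the mean down and shrinking the standard error even though no new measurement was made. Reporting the raw summary, the filter rule, and the filtered summary side by side is one way to "clearly state what you have done."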

Data Massaging is often utilized in Climate Science modeling. Although Data Massaging can be helpful, you need to be very careful when utilizing it. If it is done incorrectly or improperly, you will introduce the problem of Garbage In (see below) into your computer model. Much of the current controversy on Climate Change centers around "Data Massaging," "Data Adjustments," or "Corrected Data." Many skeptics of Anthropogenic (man-made) Climate Change are very leery of these adjustments, as they always tend to show increased Anthropogenic Climate Change. The Anthropogenic Climate Change skeptics also believe that some data is being adjusted that should not be adjusted (such as satellite data, which has a higher accuracy than other data types). All persons should be cautious and wary of Data Massaging, as if it is done incorrectly or improperly it will lead to incorrect results.

Data Quality

One of the problems for Climate Change studies and Climate Modeling is obtaining useful data. Hard data (actual measurements) has only been accurate for the latter half of the 20th century, and it often lacks the precision required for computer modeling. Data from the 21st century has been more precise and therefore more useful for the computer models, but there is insufficient data for accurate Climate Modeling. Prior to the latter half of the 20th century, it becomes necessary to utilize soft data (extrapolated data). For instance, we need to know the temperature of land masses, atmosphere, and oceans for the past several thousand years over various parts of the Earth. As there were no meteorological stations recording this information, we must extrapolate it from such things as ice core samples, tree ring growth, sediment deposits, etc. This extrapolation process has its own issues, concerns, and limitations, and depending on what you extrapolated and how, the data must be carefully and correctly massaged to make it useful. Even after it is carefully and correctly massaged, it is suspect and has margins of error that impact the Climate Model. This, unfortunately, leads to GIGO, as explained later in this article.

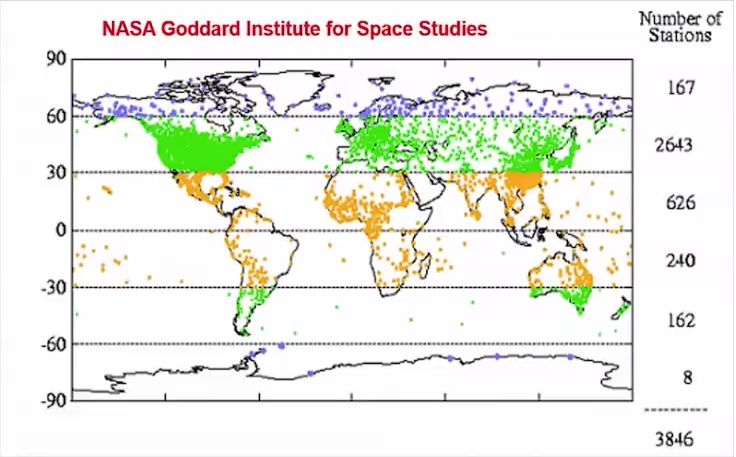

Data quality is determined not only by the accuracy of the measurements but also by the locations of the measurements. The more diverse and distributed the measurement locations, the more useful the measurements. Prior to the 21st century, most of these measurements were from ground stations, with a few from satellites. In the 21st century, many more measurements are being collected from satellites, which have a higher quality of measurement and more diverse measurement locations (they can measure any point on the Earth that they are pointed at). The following chart shows the distribution of the ground station locations.

As can be seen from the above chart, the distribution of ground stations is not diverse, which can skew the data. Climate Scientists try to compensate for this skewing by applying Data Mining and Data Massaging techniques. However, as previously noted, these techniques have their own issues and concerns. It would be better for Climate Science if there were more satellite data and more reliance on satellite data.
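To see how uneven station coverage can skew an average, and one common kind of compensation (averaging within regions first, then weighting each region by the share of the surface it represents), here is a sketch with made-up numbers. The regions, station readings, and area shares are all hypothetical; real gridding schemes are far more elaborate.

```python
from statistics import mean

# Hypothetical temperature anomalies (deg C) from stations grouped by region.
# Region A is densely instrumented; region B, equally large, has only two stations.
stations = {
    "A": [0.9, 1.0, 1.1, 0.8, 1.0, 1.2, 0.9, 1.1],
    "B": [0.2, 0.4],
}
# Hypothetical share of the Earth's surface each region represents.
area_share = {"A": 0.5, "B": 0.5}

# Naive approach: every station counts equally, so region A dominates.
naive = mean(v for readings in stations.values() for v in readings)

# Area-weighted approach: average within each region, then weight by area.
weighted = sum(area_share[r] * mean(readings) for r, readings in stations.items())

print(f"naive station average : {naive:.2f}")
print(f"area-weighted average : {weighted:.2f}")
```

Here the naive average is 0.86 and the area-weighted average is 0.65. Weighting corrects for the crowded region, but the weighted figure for region B now rests entirely on two stations, which illustrates why sparse coverage remains a problem even after compensation.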

Computer Modeling Issues, Concerns & Limitations

Most scientific and engineering endeavors utilize Computer Modeling. Therefore, you need to know the issues, concerns, and limitations of Computer Modeling to determine its impact on scientific and engineering endeavors. "Computer Modeling" is another paper I have written that examines the issues, concerns, and limitations of Computer Modeling. I would direct you to this paper to better understand Computer Modeling. However, the conclusions of this paper are as follows, and you should always remember them:

Computer Models are great tools for helping you think; just never rely on them to do the thinking for you.

Computer modeling has at its core three levels of difficulty: Simple Modeling, Complex Modeling, and Dynamic Modeling. Simple modeling is when you are working on a model that has a limited function: a few hundred (or perhaps a thousand) constants and variables within the components of the model, and a dozen or so interactions between those components. Complex modeling occurs when you incorporate many simple models (subsystems) together to form a whole system, or where there are complex interactions and/or feedback within and between the components of the computer model. Not only must the subsystems be working properly, but the interactions between the subsystems must be modeled properly. Dynamic modeling occurs when you have subsystems of complex modeling working together, or when complex and varying external factors are incorporated into the computer model.

The base problem with computer modeling is twofold: 1) verification, validation, and confirmation, and 2) correlation vs. causation. Verification and validation of numerical models of natural systems are impossible, because natural systems are never closed and because model results are always nonunique. The issue of correlation vs. causation is this: how can you know whether the results of your computer model reflect the actual cause (causation), or merely appear to reflect the cause (correlation)? To determine this, you need to mathematically prove that your computer model is scientifically correct, which may be impossible.
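The correlation-versus-causation problem can be sketched with two made-up series that have no causal connection at all; each is simply an independent random series with its own upward drift. Their raw correlation is nevertheless very high. Correlating the year-to-year changes instead, one common check (an illustrative choice, not a proof of anything), removes the shared drift, and the apparent relationship largely disappears.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def differences(series):
    """Year-to-year changes, which strip out a steady shared trend."""
    return [later - earlier for earlier, later in zip(series, series[1:])]


rng = random.Random(42)  # fixed seed so the run is repeatable
years = range(100)
# Hypothetical series: independent noise, each with its own upward drift.
series_a = [0.02 * t + rng.gauss(0.0, 0.1) for t in years]
series_b = [0.5 * t + rng.gauss(0.0, 2.0) for t in years]

r_levels = pearson(series_a, series_b)
r_changes = pearson(differences(series_a), differences(series_b))

if __name__ == "__main__":
    print(f"correlation of levels:  {r_levels:.2f}")   # high, yet no causal link
    print(f"correlation of changes: {r_changes:.2f}")  # much weaker, typically close to zero
```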

Therefore, all computer models are wrong, but many of them are useful! When thinking about a computer model, it is very important to remember three things:

- That we know what we know, and we need to be sure that what we know is correct.

- That we know what we don't know, and that allowances are made for what we don't know.

- That there are things we don't know that we don't know, which cannot be allowed for because they are totally unknown.

It is the second and third of these that are often the killers in computer modeling, and they often lead to incorrect computer models.

You also need to keep in mind these other factors when utilizing computer models:

- Constants and Variables within a component of the computer model are often imprecisely known leading to incorrect results within the components, which then get propagated throughout the computer model.

- The interactions between the components are often not fully understood and allowed for, and therefore not computer modeled correctly.

- The feedback and/or dynamics within the computer model are imprecise or not fully known, which leads to an incorrect computer model.

All these factors will result in the computer model being wrong. And as always remember GIGPGO (Garbage In -> Garbage Processing -> Garbage Out).
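How an imprecisely known constant propagates through a model can be sketched with a Monte Carlo run: draw the constant many times from its assumed uncertainty range, push each draw through the model, and look at the spread of the outputs. The two "components" below are hypothetical one-line stand-ins, and the constant's value of 0.5 with a standard deviation of 0.05 is an assumption chosen only for illustration.

```python
import random
import statistics


def component_a(k, x):
    """Hypothetical component whose output depends on an imprecisely known constant k."""
    return k * x


def component_b(a_output):
    """Hypothetical downstream component that consumes component A's output."""
    return 2.0 * a_output + 1.0


def run_model(k, x=10.0):
    """Chain the components, as a larger model chains its subsystems."""
    return component_b(component_a(k, x))


rng = random.Random(0)
# Assumed knowledge of the constant: 0.5 with a standard deviation of 0.05 (10%).
outputs = [run_model(rng.gauss(0.5, 0.05)) for _ in range(10_000)]

if __name__ == "__main__":
    print(f"nominal output: {run_model(0.5):.2f}")
    print(f"mean output:    {statistics.fmean(outputs):.2f}")
    print(f"output spread:  {statistics.stdev(outputs):.2f}")
```

The nominal output is 11, but the 10% uncertainty in k carries straight through both components, so the outputs spread by roughly plus or minus 1 around it. In a real model with many such constants, and with nonlinear interactions between components, the spreads combine and can grow rather than simply pass through.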

You should also be aware that when a computer model is used to project over a long period of time, the longer the period modeled, the more inaccurate the computer model will become. This is because the dynamics and feedback errors within the computer model build up, which affects its long-term accuracy. Therefore, long-term predictions of a computer model are highly suspect. Conversely, if you are computer modeling over a shorter period of time, the period still needs to be long enough to capture the full effects being modeled, or to provide results that are truly useful. Too short a time period will produce inconclusive (or wrong) results that are not practicable. Therefore, short-term predictions of a computer model can also be suspect. And finally, Chaos, Complexity, and Network Science have an impact on computer models that cannot be properly accounted for, which affects the accuracy of the computer model.
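The build-up of error over a long projection can be sketched with a hypothetical model in which each step's growth feeds back into the next. Suppose the true growth rate is 1.0% per step but the model uses 1.1%, a small imprecision in one constant. The relative error is under 1% after 10 steps, but roughly 10% after 100 steps and over 20% after 200 steps. The rates and step counts are assumptions chosen only to show the pattern.

```python
def project(x0, rate, steps):
    """Hypothetical feedback model: each step grows the current state by `rate`."""
    path = [x0]
    for _ in range(steps):
        path.append(path[-1] * (1.0 + rate))
    return path


true_path = project(1.0, 0.010, 200)   # assumed "true" behaviour
model_path = project(1.0, 0.011, 200)  # model constant off by 0.001 per step

relative_error = [abs(m - t) / t for m, t in zip(model_path, true_path)]

if __name__ == "__main__":
    for step in (10, 50, 100, 200):
        print(f"step {step:3d}: relative error {relative_error[step]:.1%}")
```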

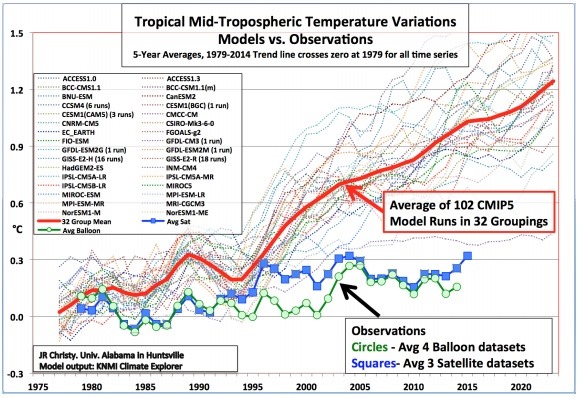

When the Statistical and Probability Methods Issues and the Computer Modeling Issues, Concerns & Limitations come together you can have some significant discrepancies as seen from the following chart.

Climate is a chaotic system with:

- A small number of knowns,

- A large number of maybes,

- An unknown number of unknowns.

How do you computer model something like that accurately?

The lesson from this issue is that a computer model is not an answer but a tool: don't trust the computer model, but utilize it. The computer modeling system itself may contain errors in its programming. The information that goes into the computer model may be incorrect or imprecise, or the interactions between the components may not be known or knowable. And there may simply be too many real-world constants and variables to be computer modeled. Use the computer model as a tool and not an answer, and above all use your common sense when evaluating it. If something in the computer model is suspicious, examine it until you understand what is happening.

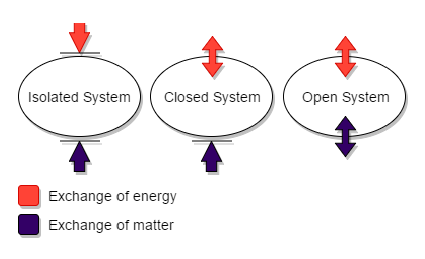

Open and Closed Systems

As noted above, computer modeling has at its core three levels of difficulty: Simple Modeling, Complex Modeling, and Dynamic Modeling. Which level applies usually depends on whether the computer model is of an open or a closed system.

Isolated, Open, and Closed Systems

In physical science, an Isolated System is either of the following:

- a physical system so far removed from other systems that it does not interact with them.

- a thermodynamic system enclosed by rigid immovable walls through which neither mass nor energy can pass.

A Closed System is a physical system that does not allow certain types of transfers (such as transfers of mass or energy) into or out of the system. Which types of transfers are excluded varies among the closed systems of physics, chemistry, and engineering.

An Open System is a system that has external interactions. Such interactions can take the form of information, energy, or material transfers into or out of the system boundary, depending on the discipline which defines the concept. An open system is contrasted with the concept of an isolated system which exchanges neither energy, matter, nor information with its environment. An open system is also known as a constant volume system or a flow system.

The concept of an open system was formalized within a framework that enabled one to interrelate the theory of the organism, thermodynamics, and evolutionary theory. This concept was expanded upon with the advent of information theory and subsequently systems theory. Today the concept has its applications in the natural and social sciences.

In the natural sciences, an open system is one whose border is permeable to both energy and mass. In thermodynamics, a closed system, by contrast, is permeable to energy but not to matter.

Open systems have a number of consequences. A closed system contains limited energies. The definition of an open system assumes that there are supplies of energy that cannot be depleted; in practice, this energy is supplied from some source in the surrounding environment, which can be treated as infinite for the purposes of the study. One type of open system is the radiant energy system, which receives its energy from solar radiation, an energy source that can be regarded as inexhaustible for all practical purposes.

Closed systems are easier to model accurately, but such models are less reliable representations of the real world, which is an open system. Open systems are much more difficult to computer model, and their models are less accurate representations of the real world, because the inputs to and outputs from an open system are numerous, imprecise, and variable. Dynamic computer models tend to be of open systems, while simple computer models are usually of closed systems. Complex computer models tend to have subsystems that are closed computer models, while the entire system can be an open or a closed system.
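A textbook example of the radiant energy (open) system described above is the zero-dimensional energy balance: sunlight crosses the system boundary on the way in, infrared radiation crosses it on the way out, and the temperature settles where the two balance. The sketch below assumes a solar constant of 1361 W/m² and an albedo (reflectivity) of 0.30, and it deliberately ignores the atmosphere, oceans, and all feedbacks, so it illustrates an open system rather than serving as a climate model. It shows that the answer depends directly on inputs from outside the system: a hypothetical change of 0.01 in albedo shifts the result by nearly a full degree.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def equilibrium_temperature(solar_constant, albedo):
    """Temperature (kelvin) at which absorbed sunlight equals emitted infrared.

    Zero-dimensional energy balance: no atmosphere, oceans, or feedbacks.
    """
    # Sunlight intercepted by a disc, spread over a sphere four times its area.
    absorbed = solar_constant * (1.0 - albedo) / 4.0
    # Solve absorbed = SIGMA * T**4 for T.
    return (absorbed / SIGMA) ** 0.25


baseline = equilibrium_temperature(1361.0, 0.30)
darker = equilibrium_temperature(1361.0, 0.29)  # hypothetical 0.01 drop in albedo

if __name__ == "__main__":
    print(f"albedo 0.30: {baseline:.1f} K")
    print(f"albedo 0.29: {darker:.1f} K (change {darker - baseline:+.2f} K)")
```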

GIGO (Garbage In Garbage Out)

At the dawn of the computer age there was a frequently used acronym: GIGO (Garbage In -> Garbage Out). It meant that if your inputs were incorrect, your outputs would be incorrect. It left unspoken that if your processing instructions (the computer program) were incorrect, even correct inputs would produce incorrect outputs. The correct acronym should have been GIGPGO (Garbage In -> Garbage Processing -> Garbage Out), which is unpronounceable and would have been an admission that computer programs contained mistakes. As computer programs became more complex, GIGPGO became more pronounced. Today's computer programming is very complex, and the most complex programming is computer modeling. In computer modeling, the number of formulas (algorithms) and the interrelationships between them are so complex that a computer model is rarely written by one person. Many computer models are produced with general computer modeling toolkits, which themselves are very complex. Computer models and computer modeling toolkits are usually written by teams of very knowledgeable and very experienced computer programmers. But team programming is fraught with problems: team members are human, and humans make mistakes and miscommunicate, the result of which is imperfect computer modeling programs. Many team programming efforts have extensive procedures, policies, and testing to reduce these potential mistakes, but mistakes will still happen. In addition, computer programmers are rarely Subject Matter Experts, and Subject Matter Experts are rarely (excellent) computer programmers. Therefore, GIGPGO is always present; indeed, it has been stated that all computer models are incorrect, but many are useful. GIGPGO must always be kept in mind when examining the results of a computer model, as all computer models contain GIGPGO.

It should also be noted that fixing an error in a computer model may be easy or difficult. Fixing the consequence of utilizing an incorrect computer model is usually difficult, and the consequences can often lead to a disaster. Many of today's computer models are reliable but imperfect. When mistakes are uncovered they are corrected and learned from (every problem is a learning experience). The accumulation of learning experiences leads to better and more reliable computer models, but many more learning experiences are to be expected, and many improvements to computer modeling software will have to be undertaken.

As always, be sure to allow for GIGPGO (Garbage In, Garbage Processing, and Garbage Out) as well as the impacts of Data Massaging and Data Quality in your Climate computer model.
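The difference between garbage in and garbage processing can be sketched with a hypothetical averaging routine. The first function has a plausibility check that rejects obviously bad inputs; the second contains a deliberate one-line slicing bug, so it returns a wrong answer even when every input is correct. The readings, the 14.0 °C baseline, and the plausibility bounds are all assumptions for illustration.

```python
def mean_anomaly(temps_c, baseline_c):
    """Average departure from a baseline, with a basic 'garbage in' guard."""
    if not temps_c:
        raise ValueError("no readings supplied")
    for t in temps_c:
        # Assumed plausibility bounds in deg C; anything outside is treated as garbage.
        if not -90.0 <= t <= 60.0:
            raise ValueError(f"implausible reading: {t}")
    return sum(t - baseline_c for t in temps_c) / len(temps_c)


def mean_anomaly_buggy(temps_c, baseline_c):
    """Same calculation with 'garbage processing': the slice drops the last reading."""
    usable = temps_c[:-1]  # bug: should be temps_c
    return sum(t - baseline_c for t in usable) / len(usable)


READINGS = [14.1, 14.3, 14.2, 15.0]  # hypothetical readings, deg C

if __name__ == "__main__":
    print(f"correct processing: {mean_anomaly(READINGS, 14.0):.2f}")
    print(f"buggy processing:   {mean_anomaly_buggy(READINGS, 14.0):.2f}")
    try:
        mean_anomaly([14.1, 999.0], 14.0)
    except ValueError as err:
        print(f"garbage in rejected: {err}")
```

Good input gives 0.40 through the correct routine but 0.20 through the buggy one, and nothing in the buggy output announces that it is wrong. Input checks catch some garbage in; only independent review and testing of the code itself catch garbage processing.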

Chaos, Complexity, and Network Science

What are chaos, complexity, and network science, and what do they have to do with this subject? Directly, everything, and indirectly, everything! If you are going to create, develop, or utilize scientific and engineering studies, you need to be aware of chaos, complexity, and network science, and you first need to understand something of them to understand their impact on this subject. For this understanding, I have prepared another article, "Chaos, Complexity, and Network Science," to which I would direct you. Briefly, however, chaos, complexity, and network science can be summarized as follows:

Chaos theory is the field of study in mathematics and science that studies the behavior and condition of dynamical systems that are highly sensitive to initial conditions, a response popularly referred to as the butterfly effect (Does the flap of a butterfly's wings in Brazil set off a tornado in Texas?). Small differences in initial conditions (such as those due to rounding errors in numerical computation) yield widely diverging outcomes for such dynamical systems, rendering long-term prediction impossible in general.
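The butterfly effect can be seen in the logistic map, a standard textbook example of a chaotic system (it is not a climate model). Two runs start from values that differ only in the tenth decimal place, the size of a small rounding error, and are iterated with the same simple rule. At first they agree closely; within a few dozen steps they bear no resemblance to each other. The starting value of 0.2 and the parameter r = 4 are choices made for illustration.

```python
def logistic_orbit(x0, r=4.0, steps=60):
    """Iterate the logistic map x -> r * x * (1 - x), returning every value."""
    orbit = [x0]
    for _ in range(steps):
        x = orbit[-1]
        orbit.append(r * x * (1.0 - x))
    return orbit


run_1 = logistic_orbit(0.2)
run_2 = logistic_orbit(0.2 + 1e-10)  # differs only in the 10th decimal place
gaps = [abs(a - b) for a, b in zip(run_1, run_2)]

if __name__ == "__main__":
    for step in (0, 10, 20, 30, 40, 50, 60):
        print(f"step {step:2d}: {run_1[step]:.6f} vs {run_2[step]:.6f}")
```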

Complexity characterizes the behavior of a system or model whose components interact in multiple ways and follow local rules, meaning there are no reasonable means to define all the various possible interactions. Complexity arises because some systems are very sensitive to their starting conditions so that a tiny difference in their initial starting conditions can cause big differences in where they end up. And many systems have a feedback into themselves that affects their own behavior, which leads to more complexity.

Network science studies complex networks such as telecommunication networks, computer networks, biological networks, cognitive and semantic networks, and social networks. Distinct elements or actors, represented by nodes, and the links between the nodes define the network topology. A change in a node or link is propagated throughout the network, and the network topology changes accordingly. Network science draws on theories and methods including graph theory from mathematics, statistical mechanics from physics, data mining and information visualization from computer science, inferential modeling from statistics, and social structure from sociology.
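How a change at one node propagates through a network can be sketched with a breadth-first search, which finds every node reachable from the node that changed. The node names and links below are made up for illustration and are not a claim about how these quantities actually interact; the small loop (sea_ice -> albedo -> ocean_temp -> sea_ice) stands in for the kind of self-feedback described above.

```python
from collections import deque

# Hypothetical directed links: a change at a key node can influence each listed node.
LINKS = {
    "ocean_temp": ["sea_ice", "water_vapor"],
    "sea_ice": ["albedo"],
    "albedo": ["ocean_temp"],
    "water_vapor": ["cloud_cover"],
    "cloud_cover": [],
    "soil_moisture": ["vegetation"],
    "vegetation": [],
}


def affected_by(start, graph):
    """Breadth-first search: every node a change at `start` can reach, including itself."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph.get(node, []):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


if __name__ == "__main__":
    print(sorted(affected_by("sea_ice", LINKS)))
```

A change at sea_ice reaches five of the seven nodes (counting sea_ice itself, which the loop feeds back into), while soil_moisture and vegetation are untouched. Adding or removing a single link changes that answer, which is the sense in which the network topology shapes the outcome.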

Showoffs

Knowing what is important, what is unimportant, and what is misleading when reviewing studies or statistics is crucial to discovering the truth. Unfortunately, statistics are often misused. Many times, this misuse is because people don't understand statistics and their limitations. Sometimes, however, statistics are misused to lead people astray, so that they make incorrect decisions or formulate ill-founded opinions. Sometimes this is by accident, and sometimes it is deliberate. Whenever someone is utilizing statistics, always remember that 'Figures Can Lie, and Liars Can Figure,' and review the statistics cautiously. Some brief thoughts on how to be cautious and careful about statistics follow.

Studies and Statistics are often co-dependent and presented together. They are different, however, as studies are a methodology for examining an issue, reasoning, and organizing based on the facts uncovered and reaching a logical conclusion. Statistics are often utilized in this process, and indeed, they are often essential to the process. Statistics, however, are often generated without a study and can stand on their own. It is quite common for commentators to say, 'Studies Show' or 'Statistics Show', but you should never assume the studies or statistics are correct for the following reasons.

Studies Show

Studies can show anything. For every study that shows something, there is another study that shows the opposite. This is because every study carries the inherent biases of the person or persons conducting it, or of the person or organization that commissioned it. A very good researcher recognizes their biases and compensates for them to ensure that the study is as accurate as possible. Having been the recipient of many studies (and the author of a few), I can attest to this fact. Therefore, you should be very wary when a person says "studies show." You should always investigate a study to determine who the authors are and who commissioned it, and examine it for any inherent biases.

Statistics Show

Everything that I said under "Studies Show" also applies to "Statistics Show." However, "statistics show" requires more elaboration, as it deals with the rigorous mathematical science of statistics. Statistics is a science that requires very rigorous education and experience to get right. The methodology of gathering data, processing the data, and analyzing the data is very intricate. Interpreting the results accurately requires that you understand this methodology and how it was applied to the statistics being interpreted. If you are not familiar with the science of statistics, and you do not carefully examine the statistics and how they were developed, you can often be led astray. Also, many statistics are published with a policy goal in mind and therefore should be suspect. As a famous wag once said, "Figures can lie, and liars can figure." So be careful when someone presents you with statistics. Be wary of both the statistics and the statistician.
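One way the methodology can lead you astray is the choice of time window. In the made-up series below, the values rise over the whole period but include one unusually high year. A least-squares trend over the full period is upward, while a trend that happens to start at the spike is downward, from the very same data. The data are hypothetical and the example takes no side; it only shows why you should ask how a window was chosen.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys against xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


YEARS = list(range(2000, 2011))
# Hypothetical values: a steady rise with one spike in 2003.
VALUES = [0.0, 0.1, 0.2, 0.9, 0.4, 0.5, 0.5, 0.6, 0.7, 0.8, 0.9]


def slope_between(start, end):
    """Trend over the inclusive window start..end (both years must be in YEARS)."""
    i, j = YEARS.index(start), YEARS.index(end) + 1
    return ols_slope(YEARS[i:j], VALUES[i:j])


if __name__ == "__main__":
    print(f"2000-2010 trend: {slope_between(2000, 2010):+.3f} per year")
    print(f"2003-2007 trend: {slope_between(2003, 2007):+.3f} per year")
```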

Reputation, Prestige, and Ego

The other factor in Studies and Statistics is Reputation, Prestige, and Ego. Every scientist and researcher desires to be recognized as an expert and leader in their field, not only for reputation, prestige, and ego, but also for grant or research money and possible promotions or new positions. A truly great scientist or researcher recognizes this and uses this motivation to produce better science or research, and does not let it distort the actual science or research. Unfortunately, sometimes it does affect the science or research, and not only among the greats but also among the near greats and the common scientists and researchers. Hopefully, peer review weeds out these problems, but peer review has its own issues and concerns, and too many studies and statistics have passed peer review that were not worthy of passing.

Money, Money, Everywhere

Let's face it: Climate Change has become big money, and not just for the scientists and researchers. Governments and their Agencies and Bureaucracies, Colleges and Universities, Research Institutes, Educational Institutes, Policy Institutes, Think Tanks, and now Big Business are all involved. Billions and billions of dollars are spent each year on all aspects of Climate Change. When this much money is being spent, all should beware. Too many of these studies have axes to grind and money to be made, and it can be difficult to separate the real science and research from the axe-grinding. And nowadays it is not only money but also political, economic, and social power struggles that have become involved in Climate Change. When this much is at stake, the real science and research may become secondary to these interests.

The 97 Percent Solution

A 2013 survey of Global Warming in the published scientific literature described its method and findings as follows:

'We analyzed the evolution of the scientific consensus on anthropogenic global warming (AGW) in the peer-reviewed scientific literature, examining 11,944 climate abstracts from 1991-2011 matching the topics 'global climate change' or 'global warming'. We find that 66.4% of abstracts expressed no position on AGW, 32.6% endorsed AGW, 0.7% rejected AGW and 0.3% were uncertain about the cause of global warming. Among abstracts expressing a position on AGW, 97.1% endorsed the consensus position that humans are causing global warming'.

It is from this last sentence that you often hear the claim that 97% of scientists (or climatologists) believe in human-caused global warming. However, this last sentence is misleading when taken out of context. The proper context is (in round numbers) that 66% of the abstracts took no position, 33% endorsed, and 1% rejected or were uncertain about man-made global warming. In science it is important that you report all the results: positive, negative, and null. By excluding the null results you are not providing the proper information to reach a sound conclusion. The claim that 97% of scientists (or climatologists) believe in human-caused global warming is therefore unsound and misleading.
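The arithmetic behind the two readings of the survey can be checked directly from the percentages quoted above. Endorsements are about 33% of all abstracts examined but about 97% of the abstracts that expressed any position (counting the "uncertain" abstracts among those). Working from the rounded percentages gives about 97.0% rather than the reported 97.1%; that small gap is a rounding effect, presumably because the survey's figure was computed from the underlying abstract counts.

```python
# Percent of all abstracts examined, as quoted from the 2013 survey above.
SHARES = {
    "no_position": 66.4,
    "endorse": 32.6,
    "reject": 0.7,
    "uncertain": 0.3,
}


def endorse_share_of_all(shares):
    """Endorsing abstracts as a percent of every abstract examined."""
    return 100.0 * shares["endorse"] / sum(shares.values())


def endorse_share_of_position_takers(shares):
    """Endorsing abstracts as a percent of those expressing any position."""
    took_position = shares["endorse"] + shares["reject"] + shares["uncertain"]
    return 100.0 * shares["endorse"] / took_position


if __name__ == "__main__":
    print(f"share of all abstracts:        {endorse_share_of_all(SHARES):.1f}%")
    print(f"share of position-takers only: {endorse_share_of_position_takers(SHARES):.1f}%")
```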

However, even the numbers that were reported can be misleading due to several hidden variables that the authors could not ascertain. Practically all scientific literature goes through a peer-review process. If the peer reviewers have a cognitive bias in favor of man-made global warming, they may have rejected papers they disagreed with or failed to recognize their scientific worth (and this happens more often than you would think). Is the 1% rejection of man-made global warming the result of papers expressing this viewpoint not being published? Or is it because of incorrect science or methodology in those papers? It is not possible for anyone to ascertain this without reviewing every paper on global warming submitted to a scientific journal, a Herculean task that no one would ever undertake given the sheer number of papers submitted to scientific journals.

Another question is the funding for global warming scientific research. If significantly more money is spent to ascertain the effects of man-made global warming, then significantly more papers will be submitted that show these effects. How much funding is directed toward disproving man-made global warming? We would all like to believe that scientists are dispassionate and objective in their research, but scientists are human and have biases (especially when their egos or wallets are affected).

Finally, there is the question of peer pressure. If your funding or grant money, and/or your future employment or tenure, depends on a superior who has an established position on global warming, you are more likely to support your superior's position. Sadly, scientists who disagree with man-made global warming have often been scolded, ridiculed, and even berated for their opinions. Some have even lost their positions or employment as a result of not believing in man-made global warming. Who (except those in secure positions) would speak up to question man-made global warming if doing so could have deleterious effects on their livelihood and career?

Other studies, statistics, and polls on the scientific consensus supporting man-made global warming have many of these same inherent problems. Unfortunately, man-made global warming has become so economically entangled and politicized that it may be impossible to separate the science from the economics and politics of global warming.

I would caution all to be skeptical of claims of consensus or agreement when it comes to Climate Change, as well as many other areas of scientific research.

Final Thoughts

In any Climate Change model or prediction, the first things that should be discussed are the following questions:

- Have the effects of the Natural Cycle of Global Climate Change been accounted for and extracted from your Climate Model to determine the impact of any possible human contribution to climate change?

- Has your Climate Model been shown to be reasonably accurate for the 2000 years prior to the industrial revolution (i.e., before possible human contributions to climate change)?

- Has the computer model's software code been independently verified, in an open manner, to assure the veracity of the computer model (i.e., have your algorithms, constants, variables, Boolean algebra, interrelations, and feedback loops been demonstrated to be within scientific methods and computer modeling reasonability)?

- Have all the raw data, the data quality standards, the data mining techniques, and the data massaging techniques been revealed and independently verified to assure that the correct data is being utilized for the computer model?

- Have the sciences of Chaos, Complexity, and Network Science been accounted for in your predictions?
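The second question above amounts to a hindcast test: run the model over a period whose answer is already known (here, from proxy reconstructions) and measure how far it misses. This is a minimal sketch, assuming root-mean-square error (RMSE) as the measure and an arbitrary 0.10 °C tolerance as the pass mark; the anomaly values are hypothetical, and a real test would also have to account for the reconstruction's own margins of error.

```python
import math


def rmse(predicted, observed):
    """Root-mean-square error between two equal-length series."""
    if not observed or len(predicted) != len(observed):
        raise ValueError("series must be non-empty and the same length")
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical anomalies (deg C) for five pre-industrial periods.
RECONSTRUCTION = [-0.10, -0.05, 0.00, -0.20, -0.15]  # from proxies (soft data)
HINDCAST = [-0.05, -0.05, 0.05, -0.10, -0.20]        # model output for the same periods
TOLERANCE = 0.10  # assumed pass mark, deg C

score = rmse(HINDCAST, RECONSTRUCTION)

if __name__ == "__main__":
    verdict = "passes" if score <= TOLERANCE else "fails"
    print(f"hindcast RMSE {score:.3f} deg C -> {verdict} at {TOLERANCE} deg C")
```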

The real question is this: is that increase outside the normal range of what you should expect, given the short time frame (100 years) over which you are measuring? And is human activity the cause of this change? The answer to that question is that it is not known with any certainty. What concerns climate change proponents is a steady increase within the normal parameters of the century of meteorological activity, and the possibility that this increase will continue and we will have to deal with serious effects upon the Earth's climate.

The proponents of climate change often use statistical analysis and computer modeling to show that this is going to happen. It should be remembered that statistical analysis and computer modeling are tools of science and not actual science. There is also the debate on the veracity of Climate Models due to the Systemic Problems I have discussed. As explained in my observation "On the Nature of Scientific Inquiry," observation and experimentation, along with predictability and falsifiability, are at the core of science. Both the proponents and opponents of human activity causing climate change are constantly debating the predictability and falsifiability of the observations and experiments regarding climate change.

I, however, would like to comment on the predictability and falsifiability of the proponents' claims of human-caused climate change. I know of no computer model of climate change that has a high level of predictability, especially when you consider the entire history of the world. Even for the last 10,000, 1,000, or 100 years their predictability has been very low. All you have to do is go back 20 or 30 years and review the predictions made for what today's climate would be. Even predictions made just 10 years ago have not been borne out by the facts of today's climate. As to falsifiability, it seems that whenever new observations or experiments do not fit their models, the models are often adjusted to fit the new observations or experiments. Constantly revising your model is a scientific exercise that should be done, but it is also an indication that your model is incomplete, incorrect, or insufficiently comprehensive to be correct or scientific. Also, the constant readjustment of data and algorithms should be very carefully scrutinized. There are also some questions as to the veracity of some of the scientific data and techniques utilized by Climate Change proponents. As a natural skeptic of statistics (see my article "Oh What a Tangled Web We Weave"), I believe that we need to be very careful before reaching a firm conclusion on the man-made impacts of Climate Change.

Constant questioning, and answering the questions, is a hallmark of good science. Scientists are always questioning even long-established science. More than 100 years after Einstein formulated his General Theory of Relativity, scientists still run experiments and make observations to test the veracity of General Relativity. Scientists question the veracity of all observations and experiments to determine the correctness of a hypothesis or theory. They also question the data and the formulas utilized in the hypothesis or theory. If the answers do not agree with the hypothesis or theory, they question the correctness of the hypothesis or theory. Everything is open to questions, if the questions are scientifically based. Scientifically based questions must be scientifically answered. If the answers are unsatisfactory, the hypothesis or theory may be inadequate. Tens of thousands of reputable scientists, including hundreds of Nobel Prize winners, have publicly expressed their scientific questions and doubts about man-made Global Warming. These scientific questions need to be answered scientifically. To disparage the scientists asking the questions is not an acceptable scientific answer. Only scientific answers to scientific questions are acceptable (see note below).

I, therefore, am skeptical of the claims that human activity is causing major climate change. I do believe that human activity can cause minor local climate change. I am, however, concerned that it is possible that human activity could have a major impact on Climate Change. I believe much more scientific research, both pro and con, must be done before we make large-scale changes to our economy in the hope that they will prevent possible major climate change caused by human activity. I also believe that technologically reasonable and economically feasible efforts should be implemented to reduce pollutants into our climate as a matter of good social policy.

And as always you should remember the wisdom of the Bard:

There are more things in heaven and earth, Horatio, Than are dreamt of in your philosophy. - Shakespeare's Hamlet - Hamlet to Horatio

And remember climate change proponents have been wrong before:

In over 50 years of Climate Change predictions, we have seen a dismal failure of the predictions. In an article by Mark J. Perry, "50 Years of Failed Doomsday, Eco-pocalyptic Predictions; the So-called 'Experts' Are 0-50," he has noted some of the failed predictions. Below are the 50 failed doomsday, eco-pocalyptic predictions:

- 1966: Oil Gone in Ten Years

- 1967: Dire Famine Forecast By 1975

- 1968: Overpopulation Will Spread Worldwide

- 1969: Everyone Will Disappear In a Cloud Of Blue Steam By 1989 (1969)

- 1969: Worldwide Plague, Overwhelming Pollution, Ecological Catastrophe, Virtual Collapse of UK by End of 20th Century

- 1970: America Subject to Water Rationing By 1974 and Food Rationing By 1980

- 1970: Decaying Pollution Will Kill all the Fish

- 1970: Ice Age By 2000

- 1970: Nitrogen buildup Will Make All Land Unusable

- 1970: Oceans Dead in a Decade, US Water Rationing by 1974, Food Rationing by 1980

- 1970: Urban Citizens Will Require Gas Masks by 1985

- 1970: World Will Use Up All its Natural Resources

- 1970s: Killer Bees!

- 1971: New Ice Age Coming By 2020 or 2030

- 1972: New Ice Age By 2070

- 1972: Oil Depleted in 20 Years

- 1972: Pending Depletion and Shortages of Gold, Tin, Oil, Natural Gas, Copper, Aluminum

- 1974: Another Ice Age?

- 1974: Ozone Depletion a 'Great Peril to Life'

- 1974: Space Satellites Show New Ice Age Coming Fast

- 1975: The Cooling World and a Drastic Decline in Food Production

- 1976: Scientific Consensus Planet Cooling, Famines imminent

- 1977: Department of Energy Says Oil will Peak in 1990s

- 1978: No End in Sight to 30-Year Cooling Trend

- 1980: Acid Rain Kills Life In Lakes

- 1980: Peak Oil In 2000

- 1988: Maldive Islands will Be Underwater by 2018 (they're not)

- 1988: Regional Droughts (that never happened) in 1990s

- 1988: Temperatures in DC Will Hit Record Highs

- 1988: World's Leading Climate Expert Predicts Lower Manhattan Underwater by 2018

- 1989: New York City's West Side Highway Underwater by 2019 (it's not)

- 1989: Rising Sea Levels will Obliterate Nations if Nothing Done by 2000

- 1989: UN Warns That Entire Nations Wiped Off the Face of the Earth by 2000 From Global Warming

- 1996: Peak Oil in 2020

- 2000: Children Won't Know what Snow Is

- 2000: Snowfalls Are Now a Thing of the Past

- 2002: Famine In 10 Years If We Don't Give Up Eating Fish, Meat, and Dairy

- 2002: Peak Oil in 2010

- 2004: Britain will Be Siberia by 2024

- 2005: Manhattan Underwater by 2015

- 2005: Fifty Million Climate Refugees by the Year 2020

- 2006: Super Hurricanes!

- 2008: Arctic will Be Ice Free by 2018

- 2008: Climate Genius Al Gore Predicts Ice-Free Arctic by 2013

- 2009: Climate Genius Al Gore Moves 2013 Prediction of Ice-Free Arctic to 2014

- 2009: Climate Genius Prince Charles Says we Have 96 Months to Save World

- 2009: UK Prime Minister Says 50 Days to 'Save The Planet From Catastrophe'

- 2011: Washington Post Predicted Cherry Blossoms Blooming in Winter

- 2013: Arctic Ice-Free by 2015

- 2014: Only 500 Days Before 'Climate Chaos'

Another good article, by Michael Shellenberger, "Why Apocalyptic Claims About Climate Change Are Wrong," further elaborates on climate change predictions.

note - Remember, it only takes one good scientist to change everything. In 1905, his Annus mirabilis (a Latin phrase that means "wonderful year," "miraculous year," or "amazing year"), a patent clerk (third class) in Bern, Switzerland, named Albert Einstein changed physics. Einstein's five physics papers of that year ended the Classical Era of Physics and started the Modern Era of Physics. Einstein's General Theory of Relativity in 1915 overthrew over 200 years of Newton's Universal Gravity Theory. I should also mention Alfred Wegener, the originator of the theory of continental drift, which was widely discounted when he proposed it but today is a bedrock of Geology. All it takes is one good or great scientist to change science (although many good or great scientists are preferable).

A Personal Note:

The incandescent light bulb has often been described as a heat source that provides some light, given that a light bulb generates more heat than it does light. In today's political debate we often find the proponents of an issue providing a lot of heat and only a little light. These observations are meant to provide illumination (light) and not argumentation (heat).

Political opponents in today's society often use a dialog and debate methodology of demonizing, denigrating, and disparaging their opponents when discussing issues, policies, and personages. To demonize, denigrate, or disparage the messenger to avoid consideration of the message is not acceptable if the message has supporting evidence.

The only acceptable method of political discourse is disagreement - to be of different opinions. If you are in disagreement with someone, you should be cognizant that people of good character can, and often do, disagree with each other. The method of their disagreement is very important to achieve civil discourse. There are two ways you can disagree with someone: by criticizing their opinions or beliefs, or by critiquing their opinions or beliefs.

- Criticism - Disapproval expressed by pointing out faults or shortcomings

- Critique - A serious examination and judgment of something

Most people, and most commentators, have forgotten the difference between Criticism and Critique. This has led to the hyper-partisanship in today's society. In a civil society, critiquing a viewpoint or policy position should be encouraged. This will often allow for a fuller consideration of the issues, and perhaps a better viewpoint or policy position, without invoking hyper-partisanship. We can expect that partisanship will often occur, as people of good character can, and often do, disagree with each other. Criticizing a viewpoint or policy position will often lead to hostility, rancor, and enmity, which results in the breakdown of civil discourse and in hyper-partisanship. It is fine to criticize someone for their bad or destructive behavior, but it is best to critique them for their opinions or words. We would all do better if we remember to critique someone rather than criticize someone.

I would ask anyone who disagrees with what I have written here to please keep this disagreement civil. I am open to critique and will sometimes take criticism. I will always ignore demonization, denigration, and disparagement, or point out the vacuous nature or the character flaws of those that wish to silence the messenger rather than deal with the message.

Please remember that if you disagree with the messenger it is not acceptable to kill the messenger. You may kill the messenger, but the message will remain.

Further Readings

There are many books, articles, and websites that deal with the topic of Global Warming. As usual with a controversial topic, they can be for or against, scientifically literate or illiterate, accurate or inaccurate, political or apolitical, and everything in-between. I therefore cannot recommend any specific books, articles, or websites that deal dispassionately with both sides of the topic of Global Warming.

I can, however, recommend books and websites that critique (defined as a serious examination and judgment of something) the science of Global Warming. These books are:

- Apocalypse Never: Why Environmental Alarmism Hurts Us All by Michael Shellenberger

- False Alarm: How Climate Change Panic Costs Us Trillions, Hurts the Poor, and Fails to Fix the Planet by Bjorn Lomborg

- Lukewarming: The New Climate Science that Changes Everything by Patrick J. Michaels & Paul C. Knappenberger

- Unsettled: What Climate Science Tells Us, What It Doesn't, and Why It Matters by Steven E. Koonin

A website and its publications that make a scientifically reasonable critique of Global Warming science is "The Nongovernmental International Panel on Climate Change (NIPCC)" of The Heartland Institute. A few of their publications that I would recommend are:

- Climate Change Reconsidered II: Physical Science Summary for Policymakers

- Climate Change Reconsidered II: Biological Impacts Summary for Policymakers

- Climate Change Reconsidered II: Fossil Fuels Summary for Policymakers

- Why Scientists Disagree Second Edition

- Nature, Not Human Activity, Rules The Climate

A video that I would recommend is:

Other websites that I would recommend that critique the science of Climate Change are:

- Use Due Diligence on . . . Climate

- Cato Institute - Global Warming

- Climate Change Dispatch (CCD)

- Environmental Progress

- Pro & Cons: Is Human Activity Primarily Responsible for Global Climate Change?

- Real Climate Science

Finally, the following article is from a former believer, David Siegel, who decided to thoroughly investigate Climate Change. David Siegel is an entrepreneur, writer, investor, blockchain expert, start-up coach, and founder of the Pillar project and 20|30.io. He was asked the following questions: What is your position on the climate-change debate? What would it take to change your mind? His answer was "It would take a ton of evidence to change my mind, because my understanding is that the science is settled, and we need to get going on this important issue. That's what I thought, too. This is my story." Although this article is a bit long, it is a thorough examination of the issues and problems surrounding Climate Change.

I would also direct you to the testimony of John R. Christy, Professor of Atmospheric Science, Alabama State Climatologist at the University of Alabama in Huntsville, to the U.S. House Committee on Science, Space & Technology on 29 Mar 2017 and his testimony to the U.S. Senate Committee on Commerce, Science, & Transportation on 8 Dec 2015.

Disclaimer

Please Note - many academics, scientists, and engineers would critique what I have written here as neither accurate nor thorough. I freely acknowledge that these critiques are correct. It was not my intention to be accurate or thorough, as I am not qualified to give an accurate or thorough description. My intention was to be understandable to a layperson so that they can grasp the concepts. Academics', scientists', and engineers' entire education and training is based on accuracy and thoroughness, and as such, they strive for this accuracy and thoroughness. I believe it is essential for all laypersons to grasp the concepts of this paper, so they can make more informed decisions on those areas of human endeavor that deal with this subject. As such, I did not strive for accuracy and thoroughness, only understandability.

Most academics, scientists, and engineers, when speaking or writing for the general public (and many science writers as well), strive to be understandable to the general public. However, they often fall short on understandability because of their commitment to accuracy and thoroughness, as well as some audience awareness factors. Their two biggest problems are accuracy and the audience's knowledge of the topic.

Accuracy is a problem because academics, scientists, engineers, and science writers are loath to be inaccurate. This is because they want the audience to obtain the correct information, and because of the possible negative repercussions amongst their colleagues and the scientific community at large if they are inaccurate. However, because modern science is complex, this accuracy can, and often does, lead to confusion amongst the audience.

The audience's knowledge of the topic is important, as most modern science is complex, with its own words, terminology, and basic concepts that the audience is unfamiliar with or misinterprets. The audience becomes confused (even while smiling and lauding the academics, scientists, engineers, or science writers), and the audience does not achieve understanding. Many times, the academics, scientists, engineers, or science writers use the scientific discipline's own words, terminology, and basic concepts without realizing that the audience misinterprets, or has no comprehension of, these items.

It is for this reason that I place understandability as the highest priority in my writing, and I am willing to sacrifice accuracy and thoroughness to achieve understandability. There are many books, websites, and videos available that are more accurate and thorough. The subchapter on 'Further Readings' also contains books and websites on various subjects that can provide more accurate and thorough information. I leave it to the reader to decide if they want more accurate or thorough information and to seek out these books, websites, and videos for this information.

If you have any comments, concerns, critiques, or suggestions I can be reached at mwd@profitpages.com.

I will review reasoned and intellectual correspondence, and it is possible that I can change my mind, or at least update the content of this article.